Introduction

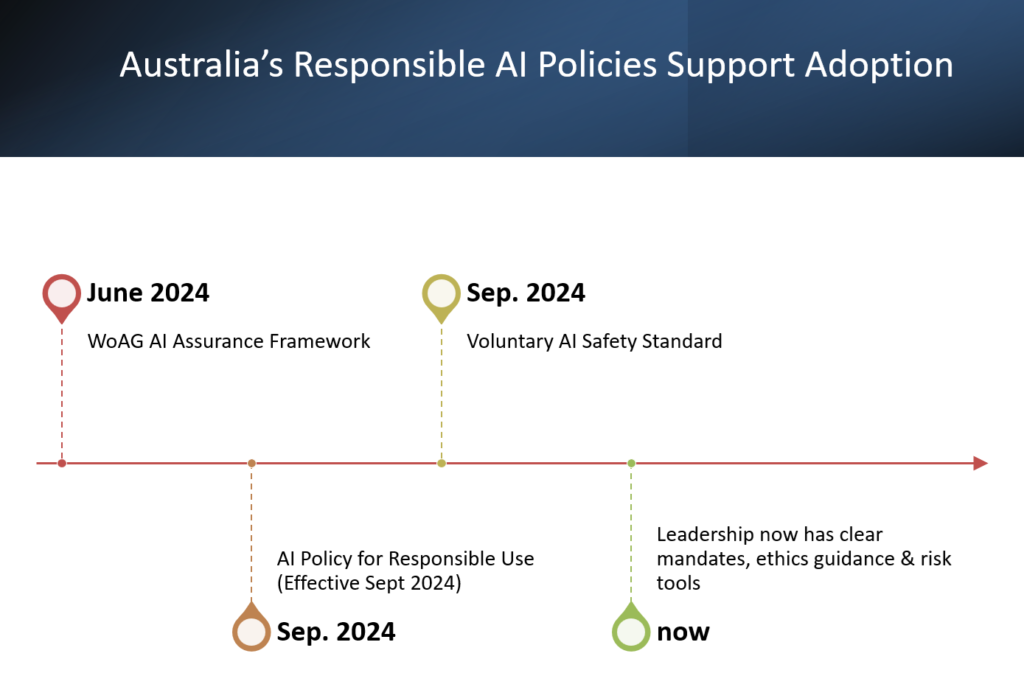

Generative AI (GenAI) is rapidly reshaping public-sector digital transformation by automating content creation, augmenting decision-making, and freeing staff from routine tasks. Combining GenAI with traditional automation and human judgment “can make government more efficient and more effective,” and the UK Government observes that AI is already helping civil servants “spend less time on repetitive tasks” and deliver services that are “smarter, faster, and more responsive.”

In this context, Australia’s federal government has released comprehensive guidance to help agencies harness GenAI safely. The National Framework for the Assurance of AI in Government (June 2024) establishes five cornerstones of AI assurance that embed ethical principles into how agencies deploy AI. Complementing it, the Policy for the Responsible Use of AI in Government (September 2024) mandates accountability. For example, it requires agencies to appoint AI “accountable officials” and publish AI transparency statements. It also requires alignment with ethical principles and privacy law. Together, these WoAG guidelines give agencies a policy-aware foundation for using GenAI to increase efficiency, gain strategic advantage, and improve service delivery.

Overview of the WoAG AI Guidelines

The National Framework for the Assurance of AI in Government (June 2024) identifies five cornerstones of AI assurance that government agencies must consider:

- Governance (and Data Governance): Organizational structures, policies, roles and training that ensure AI is used “ethically and lawfully,” with clear accountability and risk management. At the agency level, leadership must commit to AI oversight, train staff, and adapt decision processes to take AI outputs into account. This includes strong data governance to ensure AI training data is “authenticated, reliable, accurate and representative.”

- A Risk-Based Approach: Agencies should assess each AI use case individually, applying rigorous testing and monitoring in high-risk scenarios while allowing lighter processes for low-risk applications. Risk assessments cover the entire AI lifecycle (from design to deployment) and determine the level of assurance needed (e.g. red-teaming or review by external bodies).

- Standards Alignment: Wherever practical, agencies should adopt relevant AI standards for governance and risk management to ensure consistent, interoperable implementation of AI. Relevant standards include AS ISO/IEC 42001 (AI management systems), AS ISO/IEC 23894 (AI risk management) and AS ISO/IEC 38507 (governance implications of AI). This also includes adhering to existing data and cybersecurity standards and tracking new AI standards as Standards Australia publishes them.

- Procurement Practices: Procuring AI systems requires special safeguards. Contracts should explicitly incorporate AI ethics principles (e.g. fairness, transparency, accountability) and define performance testing, data access, and vendor responsibilities. Agencies must build AI capability internally and avoid vendor lock-in by requiring knowledge transfer and ongoing support in contracts.

- Oversight in Generative AI: An explicit focus in the framework is on generative AI tools. Agencies are directed to follow additional guidance that emphasizes human oversight of AI-generated content, protection of sensitive data, and clear traceability of AI use.

References – https://www.finance.gov.au/sites/default/files/2024-06/National-framework-for-the-assurance-of-AI-in-government.pdf